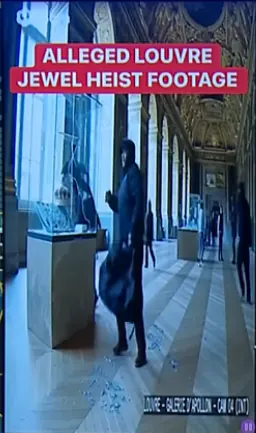

Viral "Louvre heist interior footage" is AI-generated

A viral vertical "leaked CCTV" clip supposedly from the October 2025 Louvre robbery is an AI fabrication. Here is the complete breakdown of how we know.

Verdict

AI-generated fabrication of the Louvre heist

A viral vertical video presented as leaked Louvre CCTV is synthetic footage, likely produced with OpenAI's Sora. No authenticated interior recording matches the frames in circulation.

Claim

A vertical "leaked CCTV" clip shows the real October 19, 2025 Louvre robbery from inside the Apollo Gallery.

Reality

The clip is a composited, AI-generated recreation that fails basic spatial, lighting, and watermark checks when compared with confirmed Louvre material.

Where it spread

- Instagram Reels reposted by crime-hype accounts

- TikTok stitch claiming "insider leak"

- X threads linking to downloads for "uncensored" footage

- YouTube Shorts channels summarising the heist

- Facebook groups discussing museum security failures

Watch the circulating clip

The viral video is preserved through Perma.cc. Load times can vary depending on the archive.

How the investigation unfolded

- Reverse video searches against reposts on Instagram, TikTok, and X surfaced identical frames linked to the same Perma.cc archive captured on 24 Oct 2025.

- Watermark inspection revealed the Sora watermark ghosted in reflections, a known signature when exporting from OpenAI's text-to-video model.

- Frame-by-frame artifact analysis showed physics-breaking reflections (the reflection of a thief's hand floating where there is no mirror panel) and warped display cases.

- Geolocation checks using museum floor plans and Reuters graphics confirmed the Apollo Gallery layout does not match the AI reconstruction.

- Cross-checking official footage and press pool clips from AFP, Reuters, and France 24 found no corroborating interior handheld video.
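The frame-matching step above can be illustrated with a perceptual hash. This is a minimal sketch, not the tooling used in the investigation, which the article does not name. It assumes frames have already been decoded, converted to grayscale, and downscaled to a small grid by some video tool; the `Frame` type, the function names, the sample pixel values, and the `max_distance` threshold are all hypothetical choices made for illustration.

```python
from typing import List

# Assumption: a frame is a small grayscale grid (rows of 0-255 values),
# already extracted and downscaled from the video by a separate tool.
Frame = List[List[int]]


def average_hash(frame: Frame) -> int:
    """Build a hash with one bit per pixel: 1 if brighter than the frame mean."""
    pixels = [p for row in frame for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Count the bit positions where two hashes differ."""
    return bin(a ^ b).count("1")


def likely_same_frame(a: Frame, b: Frame, max_distance: int = 2) -> bool:
    """Treat two equally sized frames as the same shot if their hashes nearly match."""
    return hamming_distance(average_hash(a), average_hash(b)) <= max_distance


# Illustrative data only: a frame, a brightness-shifted repost, and an unrelated frame.
original = [
    [10, 200, 30, 220],
    [15, 210, 25, 230],
    [12, 205, 28, 225],
    [11, 198, 33, 219],
]
repost = [[p + 5 for p in row] for row in original]
unrelated = [list(reversed(row)) for row in original]

print(likely_same_frame(original, repost))
print(likely_same_frame(original, unrelated))
```

Because each bit is set relative to the frame's own mean brightness, a uniform brightness shift introduced by re-encoding leaves the hash unchanged, which is why reposts on different platforms can still be linked to one source clip. A match only shows that two uploads share footage; it says nothing about whether that footage is authentic.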

What the real space looks like

Authentic press photography shows details the AI clip fails to reproduce, from the ceiling coffers to the vitrines lining Galerie d'Apollon.

Forensic findings at a glance

| Indicator | Evidence | Why it matters |
|---|---|---|
| Sora watermark | Ghosted "S" ripples visible on mirrored columns in TikTok downloads. | OpenAI Sora exports add a faint watermark; authentic CCTV from the Louvre would not. |
| Impossible reflection | Thief's hand reflection floats without a matching mirror panel. | Diffusion models frequently hallucinate mirror physics and object persistence. |
| Incorrect gallery geometry | Display-case spacing and ceiling coffers differ from verified Apollo Gallery photos. | Suggests the room is AI-imagined, not captured on-site; Louvre documentation proves the mismatch. |

Reality check: Location and timeline

Geolocation

Claimed location: Galerie d'Apollon, Musée du Louvre, Paris.

Status: Layout mismatch. Decorative ceiling bays and vitrine positions in the video diverge from official Louvre imagery.

Chronolocation

Claimed event date: 19 Oct 2025.

Status: Authorities have not released interior CCTV. Verified visuals are limited to exterior exits and police perimeter footage.

Risk assessment

- Confusion between verified reporting and fabricated "inside" footage muddies public understanding of the heist.

- Circulating AI clips in evidence-focused communities risks contaminating eyewitness discussions and chain-of-custody debates.

What would change this verdict?

Only an official release of interior CCTV from the Musée du Louvre, carrying verifiable provenance and matching the viral frames, would overturn the AI-generated conclusion. Short of that, the visual anomalies and Sora watermark keep the footage in the synthetic column.

Primary sources & references

- AFP: AI-generated clip of Louvre jewel heist spreads online (24 Oct 2025)

- Perma.cc: Archived Instagram embed of the viral clip (24 Oct 2025)

- Reuters: Thieves rob priceless jewels from Paris' Louvre (19 Oct 2025)

- Reuters graphics: How thieves broke into the Louvre (20 Oct 2025)

- France 24: Paris Louvre heist exterior footage (24 Oct 2025)

- Musée du Louvre: Official Apollo Gallery documentation

Keep teaching the debunk

When audiences encounter breaking-news clips, encourage them to check for watermarks, confirm location geometry, and compare with vetted press materials. Pair this case with hands-on practice in our AI Image Detector to reinforce the red flags.